Deep Learning and CNNs

These are the notes I made while trying to implement this paper. I have not followed a rigorous mathematical approach (mainly to streamline note-making); instead, I have noted down the concepts and the intuition behind them. A mathematical analysis of the topics covered can be found here.

Use the navigation UI on the left to browse through my notes. The results of the various networks I constructed are summarized below.

Classification Networks’ Structure

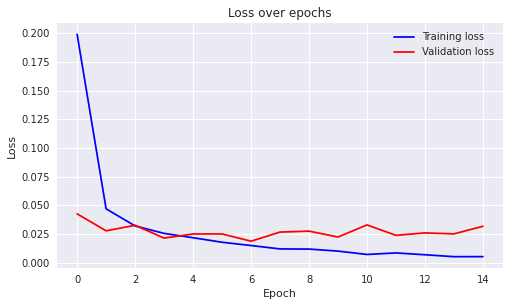

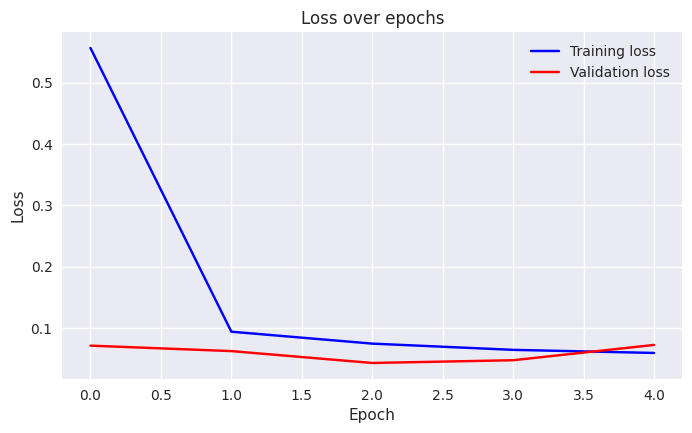

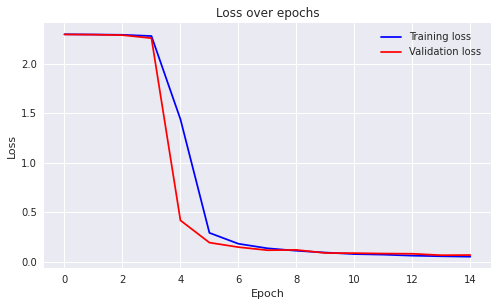

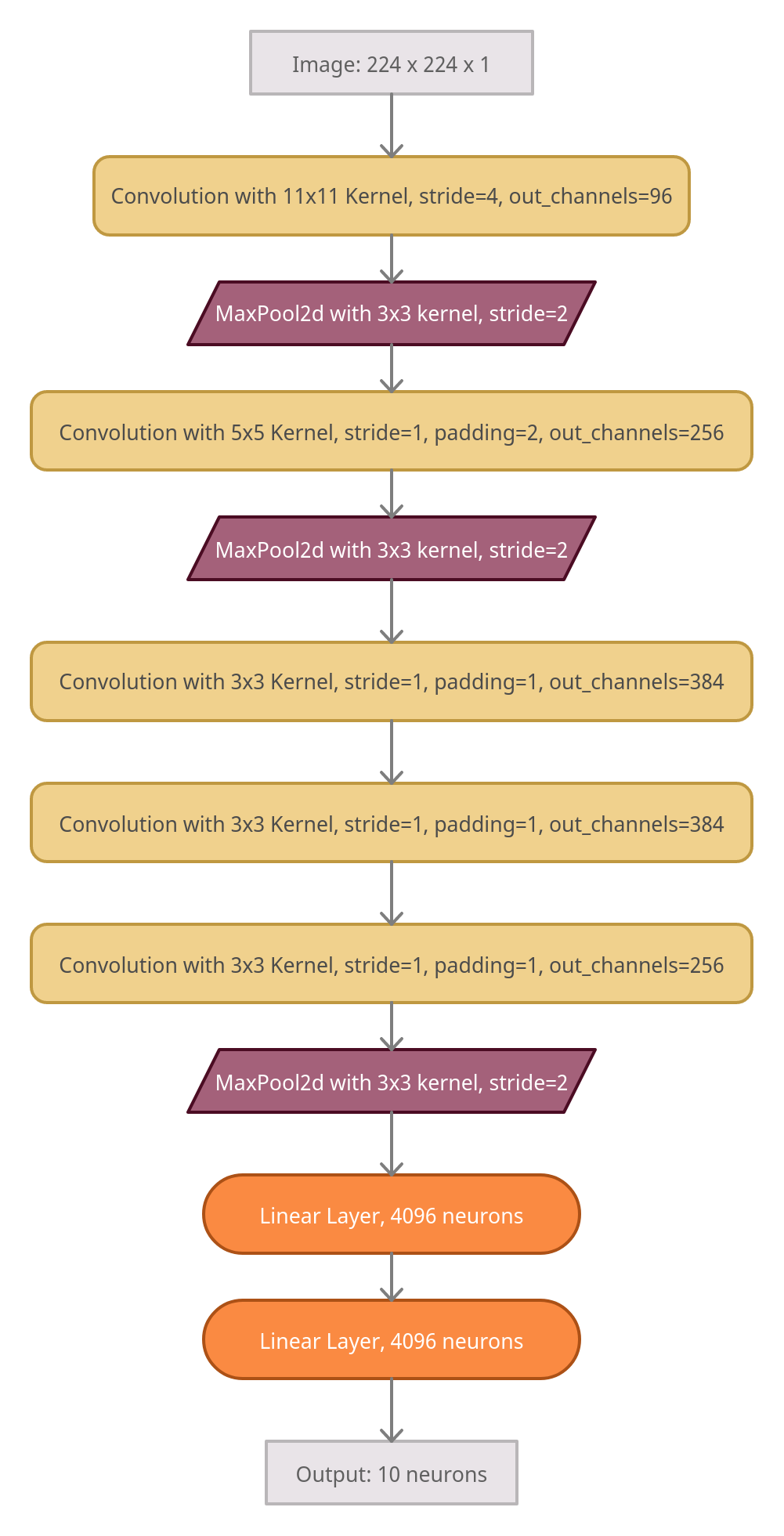

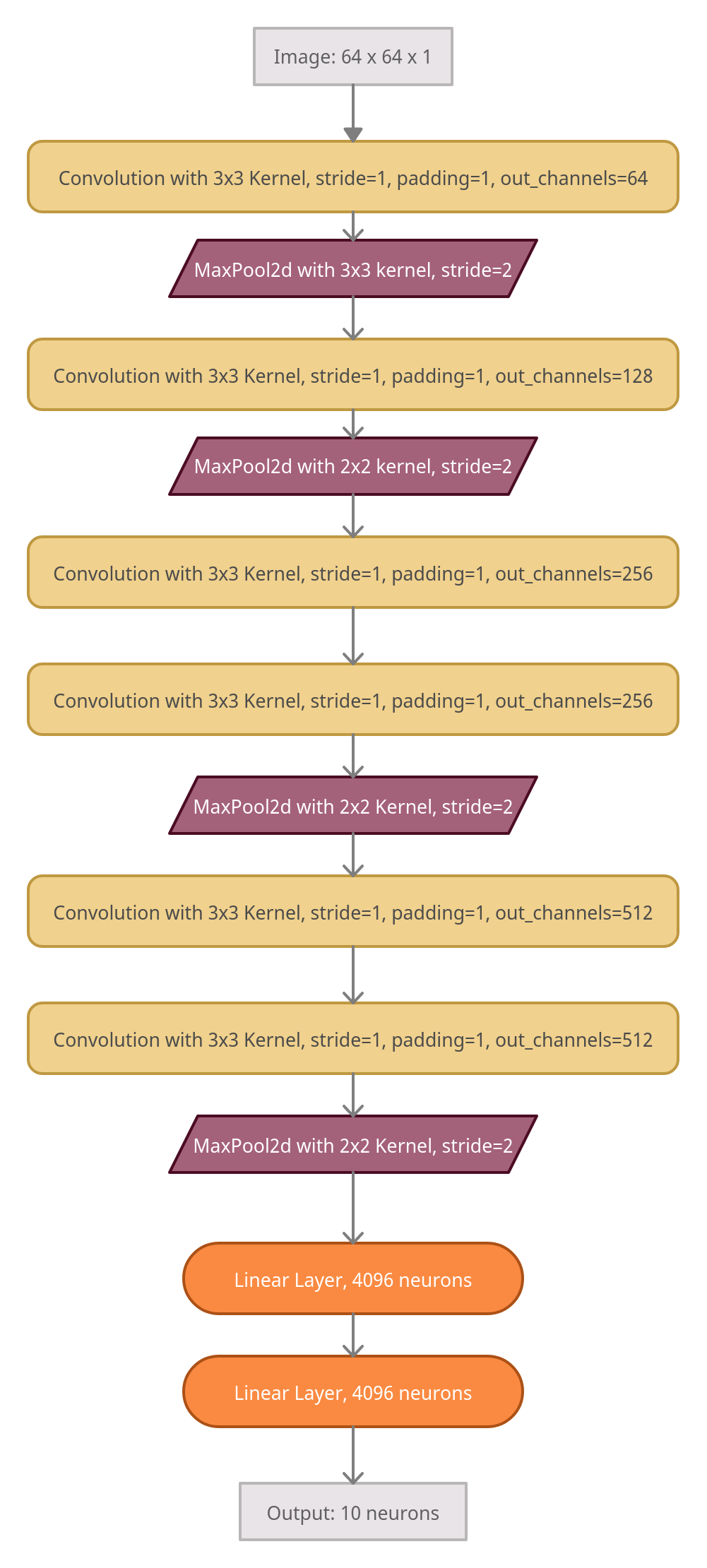

Three networks, based on the structures of AlexNet, VGGNet, and ResNet, have been constructed for the MNIST dataset. Their architectures, along with graphs comparing their loss over epochs, are shown in the accompanying figures.
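A loss-vs-epochs graph records one number per epoch: the mean training loss over that epoch's mini-batches. The following is a minimal NumPy sketch of how such a curve can be collected, assuming cross-entropy loss and plain mini-batch SGD. A linear softmax classifier stands in for the actual AlexNet, VGGNet, and ResNet models, and the function `train`, its hyperparameters, and the synthetic data are all hypothetical.

```python
import numpy as np

def softmax(logits):
    # Subtract the row max for numerical stability
    z = logits - logits.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def train(x, y, num_classes=10, epochs=5, batch_size=32, lr=0.1, seed=0):
    """Mini-batch SGD on a linear softmax classifier (a stand-in model, an
    assumption). Returns the mean cross-entropy loss of each epoch, i.e. the
    values plotted on a loss-vs-epochs graph."""
    rng = np.random.default_rng(seed)
    n, d = x.shape
    w = np.zeros((d, num_classes))
    b = np.zeros(num_classes)
    history = []
    for _ in range(epochs):
        order = rng.permutation(n)  # reshuffle the data every epoch
        batch_losses = []
        for start in range(0, n, batch_size):
            idx = order[start:start + batch_size]
            rows = np.arange(len(idx))
            p = softmax(x[idx] @ w + b)
            # Cross-entropy: negative log-probability of the true class
            batch_losses.append(-np.log(p[rows, y[idx]] + 1e-12).mean())
            # Gradient of the mean cross-entropy w.r.t. the logits
            grad = p.copy()
            grad[rows, y[idx]] -= 1.0
            grad /= len(idx)
            w -= lr * x[idx].T @ grad
            b -= lr * grad.sum(axis=0)
        history.append(float(np.mean(batch_losses)))
    return history

# Hypothetical synthetic 10-class data in place of MNIST
rng = np.random.default_rng(1)
x = rng.normal(size=(512, 20))
y = (x @ rng.normal(size=(20, 10))).argmax(axis=1)
history = train(x, y)
print(history)
```

Plotting `history` against `range(1, len(history) + 1)` gives a curve of the same kind as the graphs above.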

The ResNet architecture is identical to the one described in the paper linked above.
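The central component of the ResNet architecture is the residual block: its stacked layers learn a residual F(x), which a shortcut connection adds back to the block's input. The following is a minimal NumPy sketch of a basic block. It assumes an identity shortcut (input and output channel counts match) and omits batch normalization for brevity. `conv3x3` and `residual_block` are hypothetical helper names, not taken from the notes.

```python
import numpy as np

def relu(z):
    return np.maximum(z, 0.0)

def conv3x3(x, w):
    """3x3 convolution, stride 1, zero padding 1, so H and W are preserved.
    Like deep learning frameworks, this computes cross-correlation.
    x: (C_in, H, W), w: (C_out, C_in, 3, 3) -> (C_out, H, W)"""
    _, h, wd = x.shape
    xp = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    out = np.zeros((w.shape[0], h, wd))
    for i in range(3):
        for j in range(3):
            # Input window aligned with kernel offset (i, j)
            window = xp[:, i:i + h, j:j + wd]
            out += np.einsum("oc,chw->ohw", w[:, :, i, j], window)
    return out

def residual_block(x, w1, w2):
    """Basic block with identity shortcut: relu(F(x) + x), where
    F(x) = conv3x3(relu(conv3x3(x, w1)), w2). Batch norm omitted (assumption)."""
    f = conv3x3(relu(conv3x3(x, w1)), w2)
    return relu(f + x)  # the shortcut adds the unmodified input back

rng = np.random.default_rng(0)
x = rng.normal(size=(8, 28, 28))  # 8 feature maps at MNIST resolution
w1 = rng.normal(scale=0.1, size=(8, 8, 3, 3))
w2 = rng.normal(scale=0.1, size=(8, 8, 3, 3))
y = residual_block(x, w1, w2)
print(y.shape)  # (8, 28, 28)
```

With all-zero weights, F(x) = 0 and the block reduces to `relu(x)`. This illustrates why residual learning makes near-identity mappings easy for a block to represent.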

A live ResNet demo is embedded below. Press the button to generate two random images and classify them in real time.

(This might take upwards of 30 seconds because the Heroku app needs to boot up. I only did this because I thought that executing a script in a section called “Executive Summary” was funny for some reason. That’s an entire weekend I am never getting back.)

Generative Adversarial Networks’ Architecture

One GAN has been implemented for the MNIST dataset so far. The architectures of the generator and the discriminator are displayed below, along with the “Loss vs Epochs” graph obtained during training. Note that this GAN needed to be trained for much longer than any of the classification networks above.
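The generator and the discriminator are trained against each other, so each has its own loss. The following is a minimal NumPy sketch of the two losses. It assumes the standard binary cross-entropy objective with the common non-saturating generator loss; the notes do not say which GAN loss variant was used. The function names are hypothetical, and both functions take the discriminator's output probabilities as input.

```python
import numpy as np

EPS = 1e-12  # guards against log(0)

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy with real samples labelled 1 and generated ones 0:
    -[log D(x) + log(1 - D(G(z)))], averaged over the batch (assumed variant)."""
    d_real = np.asarray(d_real, dtype=float)
    d_fake = np.asarray(d_fake, dtype=float)
    return float(-np.mean(np.log(d_real + EPS)) - np.mean(np.log(1.0 - d_fake + EPS)))

def generator_loss(d_fake):
    """Non-saturating generator loss -log D(G(z)) (assumed variant): it is low
    when the discriminator labels generated samples as real."""
    d_fake = np.asarray(d_fake, dtype=float)
    return float(-np.mean(np.log(d_fake + EPS)))

# A discriminator that outputs 0.5 everywhere cannot tell real from fake
print(round(discriminator_loss([0.5, 0.5], [0.5, 0.5]), 4))  # 1.3863 (= 2 ln 2)
print(round(generator_loss([0.5, 0.5]), 4))                   # 0.6931 (= ln 2)
```

Each loss depends on the other network's current parameters. For that reason, the two curves are usually read together rather than expected to fall steadily like a classifier's loss.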

Again, you can click the button below to obtain a random output from the network. Not all the generated images resemble a digit, but most of them do.
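A random output from a GAN is typically produced by sampling a latent noise vector and passing it through the generator. The following is a minimal sketch with a hypothetical, untrained two-layer dense generator. The latent size of 100, the standard normal prior, the hidden width, and the tanh output range are all assumptions, not details of the GAN described in these notes.

```python
import numpy as np

LATENT_DIM = 100  # assumed latent size, not taken from the notes

def init_generator(rng, hidden=256):
    """Hypothetical dense generator: z -> ReLU hidden layer -> 784 pixels."""
    return {
        "w1": rng.normal(scale=0.05, size=(LATENT_DIM, hidden)),
        "b1": np.zeros(hidden),
        "w2": rng.normal(scale=0.05, size=(hidden, 28 * 28)),
        "b2": np.zeros(28 * 28),
    }

def generate(params, n, rng):
    """Draw n latent vectors z ~ N(0, I) and map them to 28x28 images."""
    z = rng.standard_normal((n, LATENT_DIM))
    h = np.maximum(z @ params["w1"] + params["b1"], 0.0)
    # tanh squashes pixel values into [-1, 1] (assumed output scaling)
    x = np.tanh(h @ params["w2"] + params["b2"])
    return x.reshape(n, 28, 28)

rng = np.random.default_rng(42)
params = init_generator(rng)
images = generate(params, 2, rng)
print(images.shape)  # (2, 28, 28)
```

To display the samples, pixels in [-1, 1] can be rescaled to [0, 255] with `(images + 1) * 127.5`. Each new draw of `z` gives a different image, which is why repeated clicks produce different outputs.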